Working With the robots.txt File in SeoToaster

The robots.txt file plays an important role in how your website is referenced on Google, other major search engines, and other websites.

Located in the root of your web server (/robots.txt), this file tells web robots whether or not to crawl your site, and which folders they should or should not index, through a set of instructions called the Robots Exclusion Protocol.

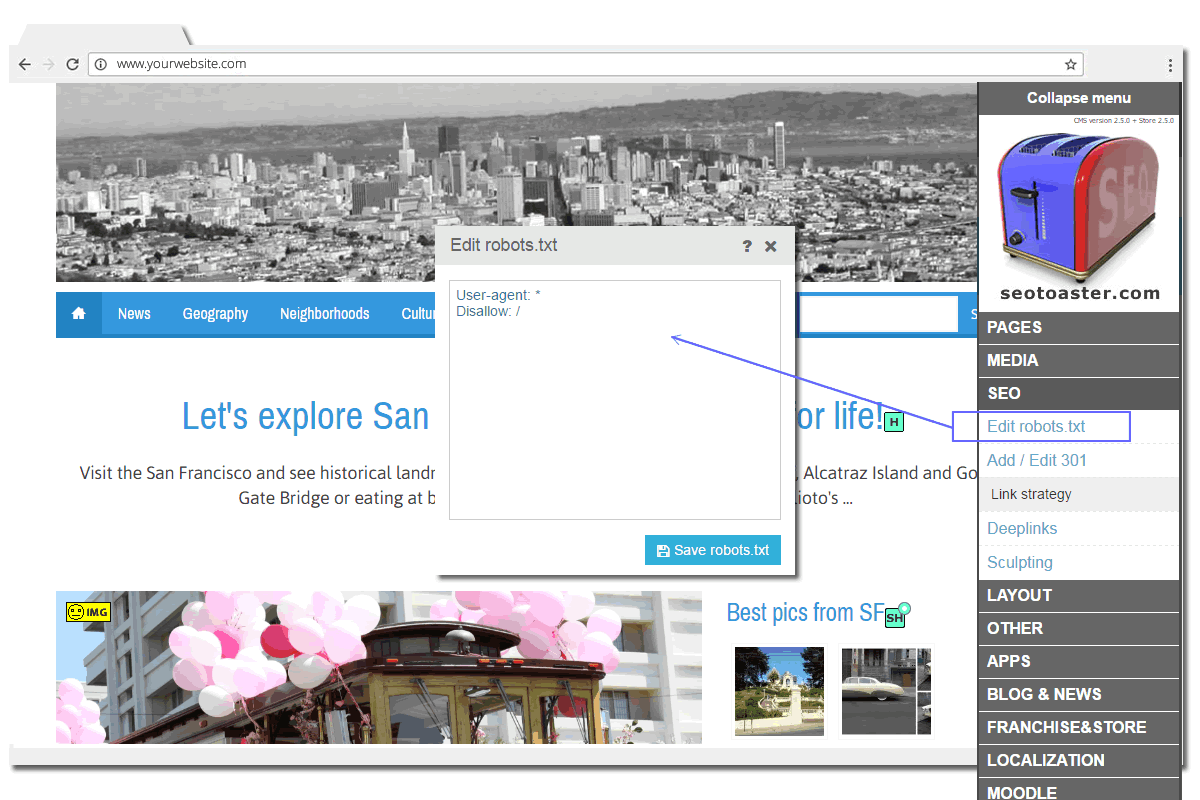

Editing your robots.txt file with SeoToaster is very easy. In fact, we even made a special menu for it in the SeoToaster control panel, so you never have to worry about how to access and edit it.

How To Edit Your robots.txt File

How To Customize & Optimize Your robots.txt File

When you'd prefer web robots NOT to crawl specific areas and folders of your website, simply add the instruction "Disallow: " followed by the folder's path relative to the root of your web server.

For instance, to prevent robots from crawling the folder http://www.yourwebsite.com/private-stuff/ you would add the following line to your robots.txt code:

Disallow: /private-stuff/
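One way to check that a Disallow rule behaves as expected is to run it through a parser before publishing it. The following is a minimal sketch using Python's standard urllib.robotparser module; the helper name is_allowed, the user agent "ExampleBot", and the page names are assumptions made for illustration, not part of SeoToaster.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content built around the rule from the text.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private-stuff/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "ExampleBot") -> bool:
    """Return True if user_agent may fetch url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A page inside the disallowed folder, and one outside it (both hypothetical).
private_ok = is_allowed(ROBOTS_TXT, "http://www.yourwebsite.com/private-stuff/page.html")
public_ok = is_allowed(ROBOTS_TXT, "http://www.yourwebsite.com/index.html")
print("private page allowed:", private_ok)
print("public page allowed:", public_ok)
```

Note that this only models a well-behaved crawler. It says nothing about robots that choose to ignore the file.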

To get your website crawled faster by web robots, you can disallow the following folders, which are present in a default SeoToaster install:

Disallow: /system/

Disallow: /themes/

Disallow: /tmp/

If your website is not indexed by search engines although it has been crawled several times by web robots, make sure your robots.txt file is present and that its permissions are properly set. This is the case by default with all SeoToaster installs, but if needed you can reset the permissions as follows:

File robots.txt - 644

If that does not work with your PHP server setup, try the following:

File robots.txt - 666
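As an illustration, the permission reset can also be scripted. This is a minimal sketch that assumes a POSIX-style filesystem. The helper name set_robots_permissions is hypothetical, and the demo runs against a temporary file rather than a real SeoToaster install.

```python
import os
import stat
import tempfile


def set_robots_permissions(path: str, mode: int = 0o644) -> str:
    """Apply mode to the file at path and return the resulting mode as an octal string."""
    os.chmod(path, mode)
    return oct(stat.S_IMODE(os.stat(path).st_mode))


# Demonstration on a throwaway file; on a real server, path would point
# at the robots.txt in the web root (location depends on your install).
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "robots.txt")
    with open(demo_path, "w") as handle:
        handle.write("User-agent: *\nDisallow:\n")
    resulting_mode = set_robots_permissions(demo_path)
    print("robots.txt mode:", resulting_mode)
```

Passing mode=0o666 instead applies the fallback value mentioned above.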

Rules & Cheat Sheet For robots.txt File

- Only use the parameters "noindex, follow" if you want to restrict indexation by search engine crawling robots.

- Fake or malicious crawlers are free to ignore robots.txt.

- You can use only one "Disallow:" line for each specific URL.

- You need a separate robots.txt for each subdomain.

- Regular expression characters for exclusion (* and $) only work with Google and Bing.

- Make sure your filename "robots.txt" is all in lowercase, e.g. not "ROBOTS.txt".

- Do not use spaces for query parameters, e.g. "/product-category/ /product-landing-page" is not allowed.
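To make the rule about the * and $ characters more concrete, here is a minimal sketch of how such patterns are commonly interpreted: * stands for any run of characters, and a trailing $ anchors the match at the end of the URL. The function name robots_pattern_matches and the sample paths are assumptions for illustration. This is not the code any search engine actually runs.

```python
import re


def robots_pattern_matches(pattern: str, path: str) -> bool:
    """Check a URL path against a Disallow pattern that may contain * and a trailing $."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece, then let each * match any run of characters.
    regex = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start, so an unanchored pattern acts as a prefix rule.
    return re.match(regex, path) is not None


pdf_blocked = robots_pattern_matches("/*.pdf$", "/files/report.pdf")
pdf_with_query_blocked = robots_pattern_matches("/*.pdf$", "/files/report.pdf?download=1")
print(pdf_blocked, pdf_with_query_blocked)
```

Crawlers that do not support these characters will typically treat them as literal text, which is why plain folder paths are the safer choice.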

Cheat Sheet for robots.txt File

- Block all web robots & all content

User-agent: *

Disallow: /

- Block a specific web robot from specific content

User-agent: Googlebot

Disallow: /no-google/

- Block a specific web robot from crawling a specific page

User-agent: Googlebot

Disallow: /no-google/blocked-page.html

- Sitemap Parameter

User-agent: *

Disallow:

Sitemap: http://www.yourwebsite.com/yoursitemap-folder/sitemap.xml
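The Sitemap entry can be read back programmatically to confirm it is picked up. The sketch below uses Python's standard urllib.robotparser module and assumes Python 3.8 or later for site_maps(). The user agent "ExampleBot" and the page URL are placeholders chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap example from the cheat sheet, one directive per line.
ROBOTS_WITH_SITEMAP = """\
User-agent: *
Disallow:
Sitemap: http://www.yourwebsite.com/yoursitemap-folder/sitemap.xml
"""

sitemap_parser = RobotFileParser()
sitemap_parser.parse(ROBOTS_WITH_SITEMAP.splitlines())

# site_maps() returns the listed sitemap URLs, or None when there are none.
sitemap_urls = sitemap_parser.site_maps()

# An empty "Disallow:" value blocks nothing, so any page stays crawlable.
page_allowed = sitemap_parser.can_fetch(
    "ExampleBot", "http://www.yourwebsite.com/any/page.html"
)
print(sitemap_urls, page_allowed)
```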